Revising the concept of Human Language ability, or communicative competence, in an LLM age

Human Language Model vs Large Language Model – Similarities, differences, and complementarities

(This article is largely part of a longer and more academic research article, the draft of which is on ResearchGate)

Generative AI Large Language Models (LLMs) are changing how we generate and interact with text. The rapid development of LLMs has sparked as much excitement as confusion.

People often assume LLMs have human-like capabilities because of the fluent text they produce. After all, language is probably what is most fundamental to being human. Another source of confusion, as Rob Nelson points out, is engineering language that describes LLMs in human terms like "neural networks," "attention," and "understanding."

And then there are many others who believe that an LLM is radically different from a human language model (HLM) in its capacity to analyze and generate language.

The truth, as usual, is somewhere in between.

Let’s look at the similarities and differences between how LLMs and humans generate language and clear up some common misconceptions. It turns out that these differences are complementary and can inspire new hybrid ways of looking at language competence – for both humans and LLMs.

Kahneman’s 2-system model: Thinking, fast and slow

To better understand both the similarities and differences between an LLM and HLM, Daniel Kahneman’s (2011) two systems of thinking provide a useful framework. System 1 operates like an autopilot that is fast, instinctive and preconscious. It is used for everyday decisions and also in threatening situations when a fight-or-flight action needs to be immediate. System 2, on the other hand, is analytical—it is slow, deliberate, and effortful. It is used for complex problems.

Both an LLM and HLM rely on recognizing patterns to produce language. For LLMs, this means using vast amounts of data and compute power to predict the next word in a sequence. This process is fast and automatic. Similarly, when human native speakers speak, words often rapidly assemble themselves without conscious thought. The language seems to speak itself. This process resembles Kahneman’s concept of System 1 thinking.

However, when human communication is more complex and involves formulating arguments or writing a story or report, the mental process demands more than associations and self-assembling words. Here, humans use hierarchical thinking—a feature tied to our attention, working memory, and consciousness. This involves planning, revising, and adapting language based on context, purpose, and audience. Unlike the sequential processing in LLMs and much of everyday human conversation, hierarchical thinking allows humans to craft complex texts and maintain coherence over extended narratives. This integrates higher-order cognitive processes known as System 2 thinking.

In the following sections, we’ll explore these similarities and differences in detail. We’ll use the Communicative Competence framework to highlight how humans' hierarchical reasoning shapes our language use, which contrasts with the sequential approach of LLMs. This comparison will also clarify why, despite impressive capabilities, LLMs still don’t truly “understand” language in the way humans do, and why, as Meta’s chief AI scientist Yann LeCun argues, LLMs will never lead to AGI.

Similarities: Sequential reasoning, next word prediction, and System 1 thinking

Humans and Large Language Models (LLMs) share some fundamental processes when it comes to generating language. At their core, both rely on recognizing patterns to produce coherent text or speech.

Let’s start with sequential reasoning, a mode of operation both humans and LLMs use. If you are a native or highly proficient speaker of a language, your everyday conversation often feels like it flows effortlessly. You respond automatically, without pausing to consider each word. This aligns with what psychologist Daniel Kahneman calls System 1 thinking: fast, automatic, and intuitive.

When someone asks, “What’s up?”, you instantly reply, “Not much. What’s up with you?” You did not plan that answer. Your brain quickly pulls from a mental store of phrases and context clues and generates a response almost instantly. This pattern recognition is preconscious. The emphasis on frequency, repetition, and patterning in language mastery amounts to a statistical view of human language, and it helps explain why highly proficient speakers process and use language so easily.

LLMs operate like this. They’re designed to predict the next word in a sequence based on statistical patterns in their training data. When you start a new conversation with ChatGPT with “what’s up?”, it will give you a response like the one it gave me: “Not much, just here to assist you. What’s up with you?”

This is about the only valid rejoinder to “what’s up?” in English. In its training data, the phrase following “what’s up?” is almost invariably “not much.” This is how LLMs work: they estimate the conditional probabilities of word sequences from vast amounts of relevant text.
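To make the idea of conditional probability concrete, here is a minimal sketch that estimates the probability of the next word from simple counts. The four-line corpus and the function name `next_word_probabilities` are invented for illustration. A real LLM does not count phrases like this: it uses a neural network trained on vastly more text. The underlying question is the same, though: given the words so far, how likely is each possible next word?

```python
from collections import Counter, defaultdict

# Toy corpus invented for illustration; real training data is vastly larger.
corpus = [
    "what's up ? not much",
    "what's up ? not much",
    "what's up ? not much",
    "what's up ? nothing new",
]

def next_word_probabilities(sentences, context_size=2):
    """Estimate P(next word | previous `context_size` words) from raw counts."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for i in range(context_size, len(words)):
            context = tuple(words[i - context_size:i])
            counts[context][words[i]] += 1
    # Convert the counts for each context into relative frequencies.
    return {
        context: {word: n / sum(counter.values()) for word, n in counter.items()}
        for context, counter in counts.items()
    }

probs = next_word_probabilities(corpus)
print(probs[("up", "?")])  # {'not': 0.75, 'nothing': 0.25}
```

In this toy corpus, "not" follows "up ?" three times out of four, so it is the most probable continuation, in the same way that "not much" dominates as a reply to "what's up?".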

When an LLM trained on trillions of English words is asked to complete the phrase “The cat sat on …” and “continue the story”, it predicts that an article such as “the” will come next, followed by a noun. The noun is chosen probabilistically from words commonly associated with things a “cat” will “sit” on, like “windowsill” or “mantel”, because those are common continuations in its training set.

At the word level, the model uses conditional probabilities to predict that "windowsill" is a likely word to follow "the" in this context. (When I regenerated the prompt several times, I got a different noun each time: windowsill, mantel, porch, antique trunk.) Based on its huge dataset, the model identifies the statistically likely series of collocations (i.e., words that frequently appear together) of cat-sat-windowsill:

The cat sat on the windowsill,

At the sentence level, the model creates coherence by drawing on learned patterns not just for words like cat, sat, and windowsill, but also for the genre of a “story” named in the prompt, and for how stories generally unfold, one word at a time. So, to continue the story, the model identified the word patterns that make up narrative structures and added "staring out into the darkened streets." This follows logically, i.e., probabilistically, and continues the narrative structure, as a cat sitting somewhere is usually looking around, especially if it is sitting by a window.

The cat sat on the windowsill, staring out into the darkened streets.

In terms of paragraph and story development, the LLM will first provide some background and context – a cat, nighttime, quiet streets:

The cat sat on the windowsill, staring out into the darkened streets. Its sleek fur glistened under the soft glow of the lamp, casting a shadow against the curtain. Outside, the town was quiet, save for the occasional rustling of leaves and distant hum of traffic.

The protagonist, Jane, is introduced in the next paragraph. The LLM’s next word prediction is focused on clues from the prompt, which in this case is a story. At some point near the beginning of a story, the protagonist will reveal her problem or goal, which in this story involves a “but”: “She was searching for a particular letter... but … ”

In the room behind the cat, Jane sifted through a stack of old letters. Each one, a time capsule of memories and forgotten dreams, carried a faint scent of lavender and ink. She was searching for a particular letter, one that held a promise she made years ago, but in the process, she unearthed fragments of her past she had long buried.

Since the story starts off with “The cat”, the LLM keeps story structure and coherence by bringing the “cat” back in the third paragraph. The cat “senses Jane’s unease” and moves towards her to comfort her.

The cat turned its head, sensing Jane's unease. With a soft meow, it jumped down from the windowsill and padded over to her. Its green eyes seemed to reflect the flickering flame of the candle on the desk. Jane looked up, her fingers trembling as she unfolded the last letter.

As the story progresses, the model’s probabilistic constraints (usually) ensure that all the sentences and paragraphs support the central storyline, in this case a cat's consoling behavior toward its troubled owner. It avoids introducing unrelated topics or inconsistent details that would break the story's coherence.

Still, since the operation is essentially a next-word prediction mechanism that samples from probabilities, the LLM will rarely produce exactly the same response twice. Sometimes the probability calculations deviate from coherence, and often from factual accuracy. The results are fabrications or hallucinations: linguistically coherent (and therefore convincing) but not factually reliable. This means a human in the loop is needed to determine whether the response is adequate or whether it requires further adjustment and iteration through prompting. The LLM is essentially guessing a response, and it is up to the human to determine whether that guess is correct or sufficiently complete.
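The variation between regenerated responses can be illustrated with a small sampling sketch. The scores below are hypothetical numbers made up for the four nouns from the cat example, and the function names are my own. Real models assign scores to tens of thousands of tokens, but the principle of drawing the next word at random in proportion to its probability is the same.

```python
import math
import random

# Hypothetical next-word scores for "The cat sat on the ...", invented for illustration.
scores = {"windowsill": 2.0, "mantel": 1.5, "porch": 1.2, "antique trunk": 0.8}

def to_probabilities(scores, temperature=1.0):
    """Softmax: turn raw scores into probabilities; a higher temperature flattens them."""
    scaled = {word: s / temperature for word, s in scores.items()}
    biggest = max(scaled.values())  # subtract the max for numerical stability
    exps = {word: math.exp(s - biggest) for word, s in scaled.items()}
    total = sum(exps.values())
    return {word: e / total for word, e in exps.items()}

def sample_next_word(scores, temperature=1.0, rng=random):
    """Draw one word at random, weighted by its probability."""
    probs = to_probabilities(scores, temperature)
    words = list(probs)
    return rng.choices(words, weights=[probs[w] for w in words], k=1)[0]

def most_likely_word(scores):
    """Always pick the top-scoring word (no randomness)."""
    return max(scores, key=scores.get)

rng = random.Random(0)  # fixed seed so the run is repeatable
samples = [sample_next_word(scores, rng=rng) for _ in range(5)]
print(samples)                   # a mix of nouns, weighted toward the likelier ones
print(most_likely_word(scores))  # windowsill
```

Always picking the top-scoring word would give the same answer every time. Sampling is what lets "porch" or "antique trunk" appear on a regeneration, and it is also one reason a low-probability, factually wrong continuation can occasionally be chosen.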

Differences: Hierarchical reasoning, Communicative Competence, and System 2 thinking

While humans and Large Language Models (LLMs) share a knack for pattern recognition, their differences become striking when we look at how they generate complex texts like marketing copy or a letter of complaint. Here’s where the human System 1 next-word prediction ability becomes overlaid with, and overshadowed by, System 2 hierarchical reasoning in the operation of “communicative competence”.

What is Communicative Competence?

For centuries up until the late 1960s, “language” was seen mainly as grammar and vocabulary. This view was influentially formulated by Noam Chomsky in his idea of linguistic competence, which focused on our ability to understand and produce grammatically correct sentences. This remained the dominant view of language in linguistics into the 2000s.

However, in the later 1960s, sociolinguists like Dell Hymes challenged that view and argued that real-world “communication” is much more nuanced. Hymes expanded the idea of linguistic competence to communicative competence. In other words, effective communication means knowing how to use language (i.e., communicate) appropriately in various contexts. What is “appropriate” in one context may not be appropriate in another: “What’s up?” may be OK when you greet your friend, but it is typically not appropriate when you are greeting your potential manager at a job interview.

Components of Communicative Competence

From the 1970s to the 2000s, a number of competing communicative competence frameworks emerged, but most of them contained five or six sub-competences.

1. Linguistic Competence: Basic knowledge of grammar and vocabulary.

2. Formulaic Language Competence: The use of fixed phrases and idioms that make communication natural.

3. Discourse Competence: The ability to organize sentences into coherent texts or conversations, like a high-school essay or sales presentation.

4. Socio-Cultural Competence: Knowing how to adjust language based on social and cultural norms.

5. Interactional Competence: Managing interactions effectively, including turn-taking and body language.

6. Strategic Competence: Using strategies to overcome communication challenges or enhance clarity.

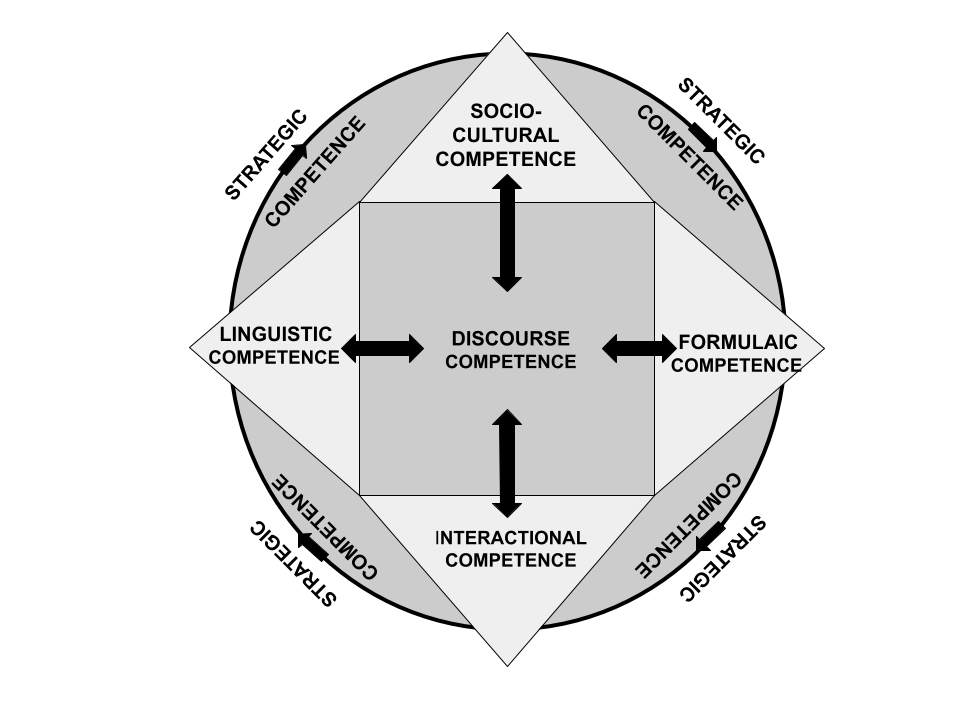

I particularly appreciate Celce-Murcia’s 2007 model because it places discourse competence at the center, giving it a core role as a communication organizer (See Figure 1). Similar to Jungian archetypes, discourse competence involves understanding the communicative purpose and shared narrative structure of texts like stories, as well as more mundane ones like emails, reports, and birthday greetings.

Figure 1. Celce-Murcia’s schematic diagram of communicative competence.

With discourse competence at the center, it integrates and mediates the five other competences:

1. Linguistic forms of vocabulary and grammar,

2. Formulaic language of idioms, phrases, and chunks,

3. Top-down socio-cultural pragmatic considerations about appropriate language for the context,

4. Bottom-up interactional verbal and non-verbal cues for personal interactions, and

5. Strategies to compensate for, or repair, other competences that are insufficient.

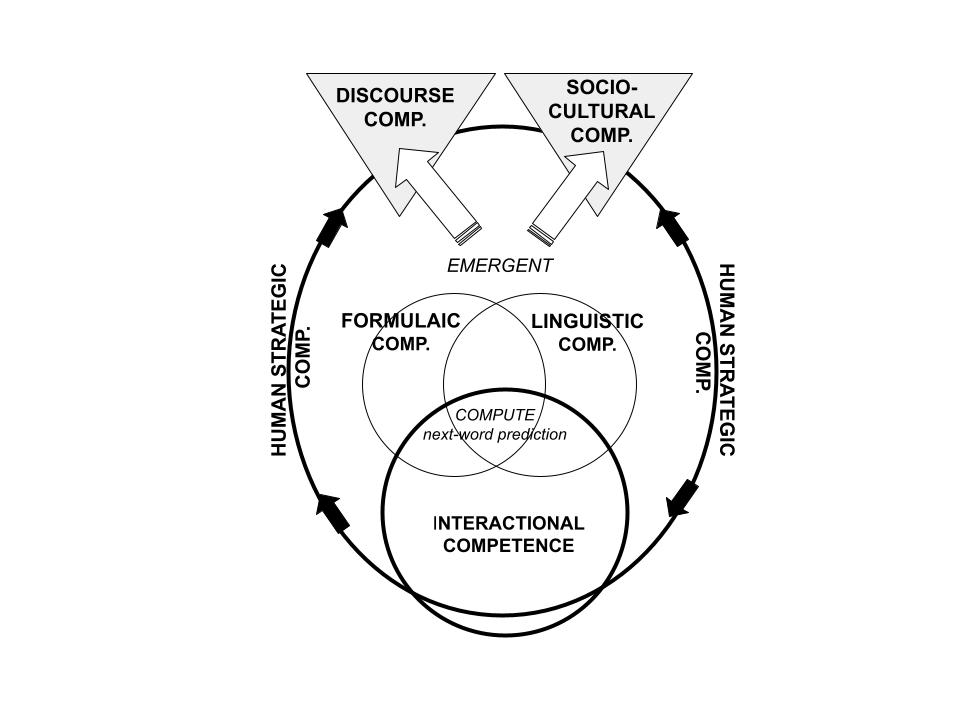

This view of human language competence is much more complex than the language calculator that is an LLM. With massive amounts of computational power, LLM chatbots rely on the conditional probabilities associated with the three bottom-up “competences”: linguistic, formulaic, and interactional, where “interactional” means the cues from the human who prompts the LLM with a language command.

So how is this different than an HLM?

As Figure 2 shows, this means, remarkably, that an LLM's linguistic and formulaic competences are sufficient for language production, rendering separate socio-cultural and discourse competences unnecessary. The latter two competences are essentially emergent properties of next-word prediction calculations, as we saw in the generated cat story, which, while not very engaging, followed a clear and coherent story discourse structure.

Figure 2. A diagram of an LLM’s “communicative” competence.

The decidedly non-human way that LLMs achieve discourse competence is truly noteworthy. Their brute-force parallel processing and sequential reasoning obviate the need for conscious top-down processes to impose discourse structures and socio-culturally appropriate language for a given social context. LLMs are truly marvels of technology, and while they can offer a glimpse of the subconscious processes of real-time human communication, they offer little insight into how the human mind works with top-down processes that are more conscious.

Also, given that LLMs like ChatGPT are essentially omniscient in terms of human knowledge and language, they have no need for strategic competence in the traditional sense to make up for a lack of linguistic resources or knowledge.

Complementarities: A hybrid, centaur model of communicative competence

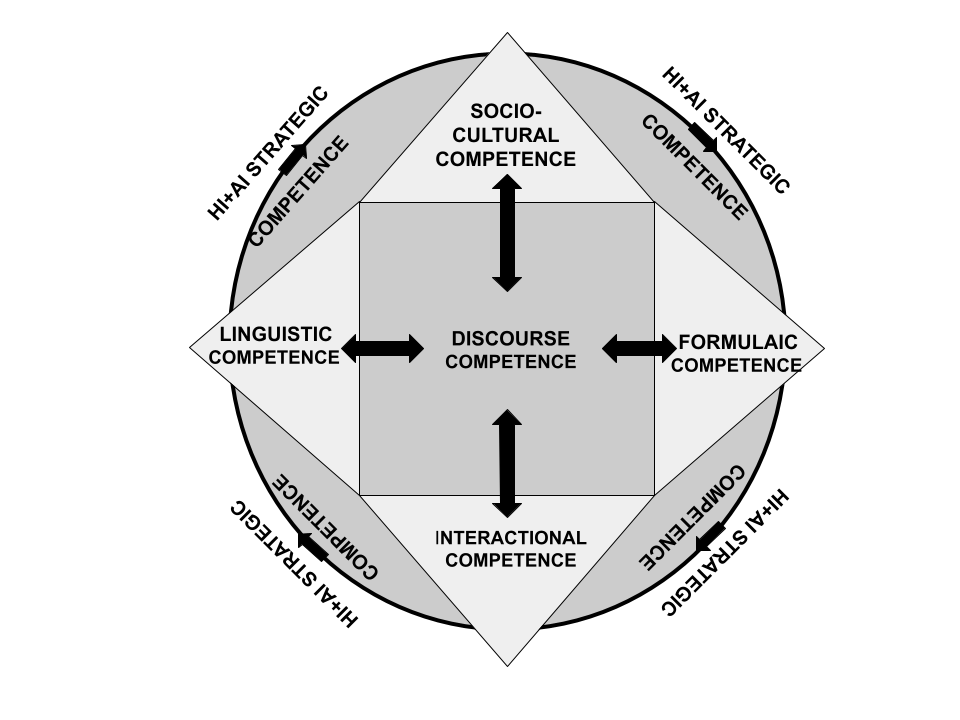

Of course, the whole notion of assigning communicative competence to an LLM is problematic since it is entirely responsive to the human user and not proactive. LLMs are not agents. But if we continue the analogy for didactic purposes, strategic competence would need to be redefined so that its role shifts to the human user, who needs to verify that the output is correct and appropriate for the context. That is why the figure is labelled “human strategic competence” rather than simply “strategic competence.”

The notion of communicative competence is here redefined along the lines of what Mollick describes as a “centaur” division of labor, where the human “switches between AI and human tasks, allocating responsibilities based on the strengths and capabilities of each entity.” In this way, if the human user notices the LLM generating hallucinations or inaccurate outputs, she must be ready to correct the potential flaws in its emergent socio-cultural and discourse competences, whether they stem from training biases or from randomness in probability calculations gone awry. The human’s System 2 hierarchical reasoning plays a pivotal role not only in the LLM’s interactional competence (prompt planning for the input) but also in its strategic competence (analytically verifying the output). The quality and complexity of the LLM output is necessarily a function of the human user’s competence.

But this relationship is reciprocal.

When humans interact with other humans, the LLM can also become a strategic tool to enhance human communicative competence. The LLM’s System 1 sequential reasoning can be leveraged as a strategic competence in human communication, especially with the real-time voice mode and translation capabilities of LLMs like ChatGPT-4o. This can apply to both synchronous speaking and asynchronous writing contexts.

Here we can revise the above human communicative competence model to replace strategic competence with HI+AI strategic competence. LLMs can be used to compensate for, or repair, other competences that are insufficient.

Figure 3. Revised version of Celce-Murcia’s (2007) communicative competence model that includes AI as a strategic competence.

In real-time conversations, for example, strategic competence is used when you can’t find the word you need, and you have to explain using other words or body language to make up for the lack of linguistic or formulaic language competences. An LLM on your smartphone can act as a strategic enhancement to your communicative competence. And as can be seen in the revised model in Figure 3, strategic competence can mediate not only linguistic competence, but all the other competences, too. When creating longer and more complex communications, such as international sales communications, LLMs can be used as a strategic competence to compensate for gaps in discourse competence (how to write an effective product description for a LinkedIn product page) or socio-cultural competence (how to design a product pitch for potential Saudi customers).

Future workflows

The linguistically masterful LLM language calculators are changing how many of us generate and interact with text, especially in the fields of education, research, sales, marketing and programming. New hybrid and centaur workflows are emerging that can leverage both the language calculation strengths of fast-thinking LLMs and the hierarchical reasoning strengths of slow-thinking humans.

However, the challenge is to develop the procedures for optimal workflows that emphasize the key role of the human and the knowledge and thinking practices that help the human not only guide the LLM but leverage the LLM to enhance distinctly human capacities, such as creativity and empathy. In the mythical centaur, the human head/torso and horse body are complementary, but the human part guides the powerful horse part.

Optimal workflows should be a major goal of education and professional training that involves generative AI: clarify and develop human strengths and deploy gen AI tools to amplify them and not relegate them to the back seat. It would also be useful to devise metrics for measuring workflows, not just in terms of time-based productivity and quality which are presently used, but also in other terms, like human-AI contribution, human learning potential, and process satisfaction. But to do this, it is important to first understand human and large language models in their similarities, differences and complementarities.
